One of the most prevalent and important technologies made possible by Artificial Intelligence

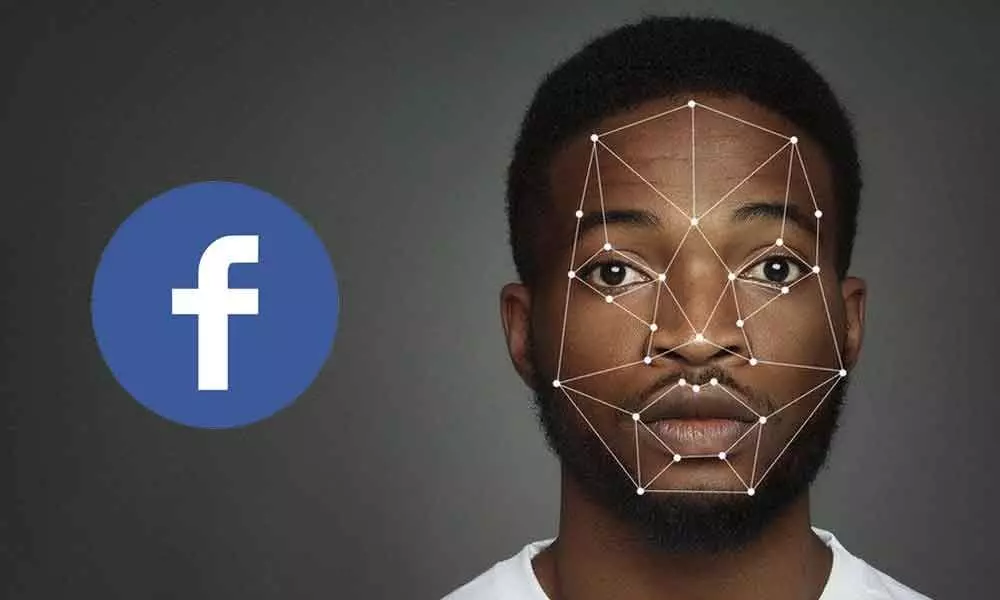

Facial recognition is a method of identifying or confirming an individual’s identity using their unique facial features. The technology is extremely common in everyday life: it is a form of biometric identification, most familiar as a way to unlock a smartphone, and it is also used to identify individuals in images, in videos, and in real time. It can pick out a single person from a large ‘pool’ or dataset of faces, which is what allows it to authenticate and verify a particular user.

Facial recognition is also used in many photo library applications. For example, Apple's ‘Photos’ app uses it to identify the individuals in your photo library so that photos can be sorted by person, which makes finding and organising photos much simpler. Machine learning allows these applications to detect people in extreme poses, people wearing face accessories, and people whose faces are partly occluded. In every case, the software determines the similarity between stored facial data and the current input in order to evaluate or verify a claim of identity, and then authenticates, verifies, or simply categorises that input.

A very prevalent use case of real-time facial recognition is in China, where an estimated 200-400 million facial recognition cameras are currently active and recording. The Chinese Communist Party (CCP) states that these cameras are only used as an initiative to ‘fight crime’ and to reduce shoplifting.

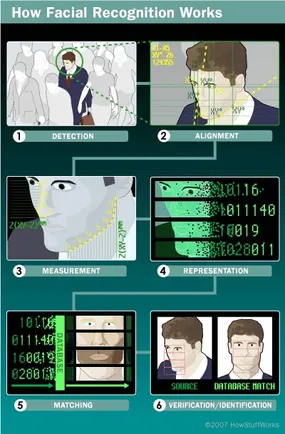

However, it is speculated that this is not the case, and that the network is instead used as a social hierarchy system and as a way of locating an individual at any moment. Real-time facial recognition was also used at the height of the COVID-19 pandemic to track Chinese residents during lockdowns.

Facial recognition is a ‘branch’ of artificial intelligence (AI) because it learns from past data inputs, which allows it to identify individuals with an approximate failure rate of 0.4%. It uses AI algorithms and machine learning techniques to detect faces. The algorithms normally begin by searching for key human facial characteristics, starting with the eyes and followed by the eyebrows, nose, irises, mouth, and nostrils. The algorithm then measures the distances between these facial features and stores this information locally. This stored data is later compared against new input data in order to verify the face presented.
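The idea of measuring distances between facial features and comparing them with stored data can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the landmark names, the pixel coordinates, the scaling by inter-eye distance, and the `threshold` value are all hypothetical and are not taken from any real facial recognition product.

```python
import math
from itertools import combinations


def landmark_signature(landmarks):
    """Return pairwise landmark distances, scaled by the distance between the eyes.

    Dividing by the inter-eye distance is an assumption of this sketch; it makes
    the signature independent of how close the face is to the camera.
    """
    names = sorted(landmarks)  # fixed order so two signatures line up
    eye_distance = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    return [
        math.dist(landmarks[a], landmarks[b]) / eye_distance
        for a, b in combinations(names, 2)
    ]


def signature_difference(stored, candidate):
    """Euclidean distance between two signatures (0.0 means identical geometry)."""
    sig_a = landmark_signature(stored)
    sig_b = landmark_signature(candidate)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(sig_a, sig_b)))


def verify(stored, candidate, threshold=0.1):
    """Accept the candidate if its geometry is close enough to the stored face."""
    return signature_difference(stored, candidate) <= threshold


# Hypothetical (x, y) pixel coordinates, for illustration only.
enrolled = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60), "mouth": (50, 80)}
same_face_closer = {"left_eye": (60, 80), "right_eye": (140, 80), "nose": (100, 120), "mouth": (100, 160)}
different_face = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 70), "mouth": (50, 95)}

print(verify(enrolled, same_face_closer))  # True
print(verify(enrolled, different_face))    # False
```

Real systems use many more landmarks and learned features, but the store-then-compare structure is the same.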

Because facial recognition is so common in everyday life, the general target audience of this technology is companies that intend to deploy and utilise it. Many people use or are subject to facial recognition very frequently, whether they are unlocking their phone, authenticating a purchase, or being tracked as part of a company’s effort to monitor its users. Facial recognition is also becoming more common in law enforcement, where it can be used in emergencies such as a suspected terrorist attack that is imminent or underway. In these situations the technology can drastically reduce the time first responders and investigators need to identify suspects and generate leads, improving the efficiency and effectiveness of the operation. Many large companies have already begun experimenting with and implementing high-performance facial recognition systems, whether to authenticate users or to distinguish between certain demographics of people and use this information to create more personalised advertisements.

Facial recognition relies on an array of different types of hardware. The most common, and one of the most essential, components is the input device, which is usually a camera system. This can be a webcam-based system, a mirrorless camera system, or a security camera system. Security camera systems are very common in large-scale real-time deployments that monitor people and track their movements, such as the previously mentioned case of China. However, this approach comes with some disadvantages. While it is simple to deploy, it is not as accurate as a system that also utilises light projectors, dot projectors, infrared cameras, proximity sensors, or ambient light sensors. For example, Apple’s ‘Face ID’ system combines different kinds of light projectors and infrared cameras to build a three-dimensional depth map of a user's face. This 3D approach is much more accurate and secure than relying on a single input device such as a security camera or webcam. In many cases, though, companies or countries wishing to track people’s movements can successfully do so with one input source, such as a security camera system or webcam.

This is mainly because these camera systems are still able to identify the unique, key facial features of the people they capture. However, for secure authentication and purchases, a multiple-input system such as Apple’s Face ID is preferred. It provides considerable extra security because it creates a three-dimensional ‘map’ of your facial features and can verify that your facial structure matches in all dimensions. A two-dimensional approach (such as a webcam or security camera system) can only identify an individual using a single position/frame of their face.

All this input data needs to be processed, and this can require large amounts of computing power, depending on the number of inputs received at any given time. For example, the processing power needed for Apple’s Face ID is significantly lower than that needed in a large-scale deployment such as China’s, simply because of the number of probable inputs. Within China’s facial recognition system, there can often be hundreds to thousands of unique inputs every second, all of which need to be individually processed, sub-sampled, mapped, and finally compared or uploaded to a database, either locally or off-site. In comparison, Apple’s Face ID system requires minimal computing power, as there are often only one to ten inputs of data. This small amount of data is easy for a system to process quickly and effectively, and since the data does not leave your device, the result is often quicker than in a large-scale deployment run by China, other countries, or large corporations.

Overall, the key hardware components essential for basic facial recognition are a camera input and a processing unit running the recognition algorithm. More advanced systems, such as facial recognition for authentication and biometric security (e.g., Face ID), require sensors such as dot projectors, light projectors, and infrared cameras. The amount of computing power required varies with the scale and complexity of the deployment, increasing as the number of unique inputs increases.
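To make the scaling argument concrete, here is a rough back-of-the-envelope sketch. It assumes brute-force matching, where every incoming face is compared against every stored template. The figures used (one input per second against ten stored templates for a phone, and a thousand inputs per second against a hypothetical database of one million templates for a city-wide network) are illustrative assumptions, not measurements of any real system.

```python
def comparisons_per_second(inputs_per_second, stored_templates):
    """Template comparisons needed each second under brute-force matching."""
    return inputs_per_second * stored_templates


# Illustrative, assumed workloads (not real measurements).
phone_unlock = comparisons_per_second(inputs_per_second=1, stored_templates=10)
city_network = comparisons_per_second(inputs_per_second=1_000, stored_templates=1_000_000)

print(phone_unlock)                  # 10
print(city_network)                  # 1000000000
print(city_network // phone_unlock)  # 100000000
```

Under these assumptions the large deployment needs roughly a hundred million times more comparisons per second, which is why processing requirements grow so quickly with the number of inputs.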

Facial recognition, a branch of Artificial Intelligence (AI), is often used for biometric authentication and security, or simply to identify individuals. The software that powers it must be extremely reliable in order to provide a near-perfect level of accuracy and overall security. A lower level of accuracy can be detrimental to a device's security, as malfunctioning code or algorithms could allow third parties to access the personal information held on devices that utilise facial recognition, such as smartphones. All types of facial recognition rely on algorithms that identify facial features by extracting ‘landmarks’ from an individual's face and analysing the relative position, size, and shape of features such as the eyes. Essentially, these algorithms read the geometry of your face, whether through a two-dimensional or three-dimensional camera system.

One of the most widely used algorithms in facial recognition systems is the Viola-Jones algorithm, which has a high detection rate and is fast at processing inputs. It is an object detection framework, used to locate faces within an image, and was developed in 2001 by Paul Viola and Michael Jones. One key limitation is that it was designed solely for frontal inputs, meaning that the face must be pointed directly at the camera or input device. The algorithm therefore cannot reliably detect a face if the person is looking sideways, upwards, or downwards. It has two main stages, which have allowed it to be adapted for many facial recognition deployments: ‘training’, followed by ‘detection’.

The ‘training’ stage involves teaching the machine to identify different facial features, such as the nose, ears, or eyes. The machine is ‘fed’ large amounts of varied data until it can recognise facial features and differentiate between inputs, at which point the AI model is trained. The second stage, ‘detection’, applies the trained model to new images to find faces and their features, which later steps use to distinguish between people. As previously mentioned, the original model was limited because it was designed for frontal image inputs. However, many companies have adopted the algorithm, modified it significantly, and included more inputs, allowing it to work well with difficult images such as slanted or angled photos.
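Part of what makes Viola-Jones fast is a detail not covered above: the published framework scores image regions with simple rectangular ‘Haar-like’ features, computed from an ‘integral image’ so that the sum of any rectangle costs only four lookups. The sketch below illustrates just that building block in plain Python. The tiny 4x4 image and the function names are made up for illustration, and the training (boosting) and cascade stages of the full algorithm are deliberately left out.

```python
def integral_image(image):
    """Build a summed-area table with one extra row and column of zeros."""
    height, width = len(image), len(image[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += image[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def rect_sum(table, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return table[bottom][right] - table[top][right] - table[bottom][left] + table[top][left]


def two_rect_feature(table, top, left, height, width):
    """Lower half minus upper half: large when a dark band sits above a bright one."""
    half = height // 2
    upper = rect_sum(table, top, left, half, width)
    lower = rect_sum(table, top + half, left, half, width)
    return lower - upper


# Hypothetical 4x4 greyscale patch: a dark band (like the eyes) above a bright band (like the cheeks).
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
table = integral_image(patch)

print(rect_sum(table, 0, 0, 2, 4))          # 80
print(two_rect_feature(table, 0, 0, 4, 4))  # 1520
```

During detection, many such features are evaluated over a sliding window, and windows with weak responses are rejected early.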

One of the key components in the development of facial recognition technology is having enough sufficiently trained personnel within a company that aims to produce, implement, or deploy it. A large range of personnel are essential to the accuracy, development, and overall security of facial recognition technology. For example, an adequate number of well-trained software engineers is essential to development and implementation. These engineers are crucial to the success of the product and to ensuring that the technology is reliable and secure. Other essential roles include data scientists, whose key purpose is to identify and capitalise on patterns within data inputs, which lets them assist software engineers in training AI models.

Personnel are a key component of any application of AI. In the development of facial recognition technology, specialised and highly trained personnel are essential to enforcing security, improving quality, and improving the overall reliability of the product. Specialised departments such as a biometrics team are key to a successful implementation. A biometrics specialist, or specialist team, aims to improve reliability by recognising and extracting new and reliable ‘landmarks’ from the data input and using this information to improve the security of the product in general. Biometrics teams also develop encryption algorithms and models, which are vital to the security of the product and can be the key differentiating factor between complete facial recognition security (i.e., processed on device, with no external processing needed) and limited facial recognition security (i.e., processed externally, and therefore with limited data security).

Some of the earliest people involved in the development of facial recognition were Woodrow Bledsoe, along with Helen Chan and Charles Bisson of Panoramic Research, Palo Alto, California. This early work involved manually marking or measuring the distances between various landmarks on the face, such as the eye centres, mouth, and nose. These early attempts were labelled ‘man-machine’ because a human had to extract the positions of the features that would be used to recognise the face, and this had to be done manually for each photograph. The computer would then use these measurements to compare with a subject and determine whether they were a match.

The lack of a large database of photographs, and of the associated data needed for each subject, rendered this early research unsuitable for real-world applications. Greater amounts of data, computing power, and software were needed.

In 1971, Goldstein, Harmon, and Lesk published a computer science paper detailing how they used 21 specific subjective markers, such as hair colour and lip thickness, to automate facial recognition. This gave more accurate results; however, the data still had to be extracted manually, which was extremely labour intensive.

In the 1980s, facial recognition software became viable when Sirovich and Kirby of Brown University, Rhode Island, began applying linear algebra to the problem. Their system became known as Eigenface, and it marked the first time that a computer could analyse facial features and take measurements itself to compare with stored images. It did away with the laborious process of humans having to do this one image at a time.
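As a rough illustration of what applying linear algebra to faces means, the toy sketch below treats each face as a list of pixel values, subtracts the average face, finds the single strongest direction of variation (one ‘eigenface’), and then identifies a new image by comparing its weight along that direction with the stored faces. Everything here is an assumption made for illustration: the four-pixel ‘faces’ are invented, only one component is kept, and power iteration is swapped in for a full eigendecomposition. It is not a reconstruction of the original system.

```python
import math


def mean_face(faces):
    """Average each pixel position across all training faces."""
    count = len(faces)
    return [sum(pixels) / count for pixels in zip(*faces)]


def subtract(face, mean):
    return [p - m for p, m in zip(face, mean)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def top_eigenface(centered_faces, iterations=50):
    """Approximate the leading eigenvector of the covariance matrix by power iteration."""
    size = len(centered_faces[0])
    vector = [float(i + 1) for i in range(size)]  # arbitrary non-zero starting guess
    for _ in range(iterations):
        # Multiply by the (unnormalised) covariance matrix without building it.
        product = [0.0] * size
        for face in centered_faces:
            weight = dot(face, vector)
            for i in range(size):
                product[i] += weight * face[i]
        norm = math.sqrt(dot(product, product))
        if norm == 0:  # all faces identical: no direction of variation to find
            break
        vector = [value / norm for value in product]
    return vector


def identify(probe, gallery, mean, eigenface):
    """Return the gallery name whose projection weight is closest to the probe's."""
    probe_weight = dot(subtract(probe, mean), eigenface)
    return min(
        gallery,
        key=lambda name: abs(dot(subtract(gallery[name], mean), eigenface) - probe_weight),
    )


# Hypothetical four-pixel "faces", for illustration only.
gallery = {
    "A": [10, 10, 0, 0],
    "B": [0, 0, 10, 10],
    "C": [9, 11, 1, 0],
}
mean = mean_face(list(gallery.values()))
eigenface = top_eigenface([subtract(face, mean) for face in gallery.values()])

print(identify([1, 0, 9, 10], gallery, mean, eigenface))  # B
```

A practical system would keep many components and use far larger images, but the compare-by-weights idea is the same.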

In the 1990s, the US Defense Advanced Research Projects Agency (DARPA) started the Face Recognition Technology (FERET) programme to encourage the commercial facial recognition market. The project involved creating a database of facial images, on the idea that a large database would lead to improvements and more powerful facial recognition technology.

How quickly a computer can match an image against others in a large database is in effect determined by factors such as the computational power available, the size of the database, and the speed of remote connections between computers.

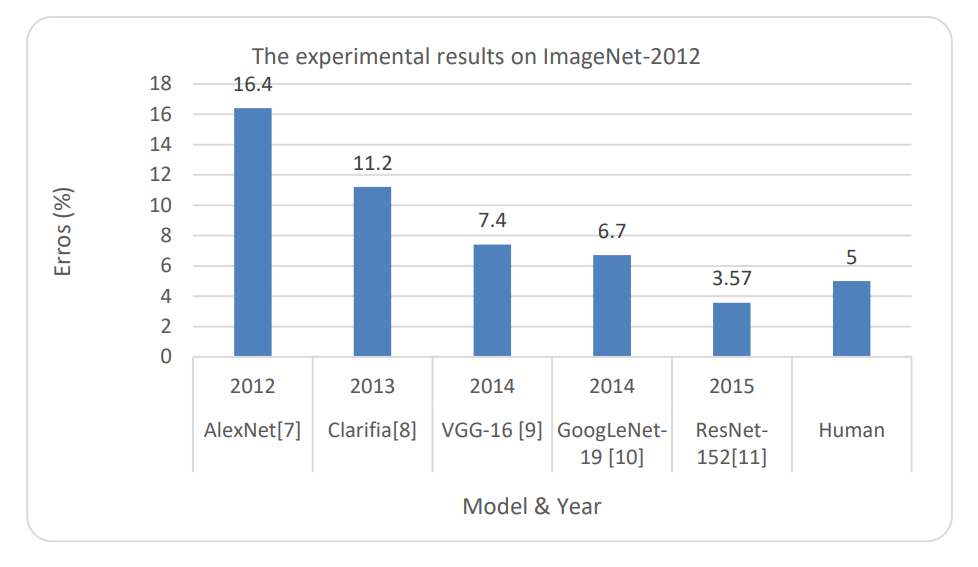

For example, Moore's Law says that the number of transistors per unit of surface area doubles every two years, which means that raw computing power follows an exponential curve. This exponential growth has led to faster and more accurate facial recognition algorithms. ImageNet is essentially an openly available dataset that can be used for machine learning research, and a yearly challenge evaluates the ability of algorithms to correctly classify images within it. The huge advances in image processing have led to the unrivalled levels of accuracy that we have today. In 2015, AI facial recognition algorithms became more accurate than humans at identifying facial images.
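The doubling rule attributed to Moore's Law above can be turned into a quick calculation. The sketch below simply assumes an idealised doubling every two years; the function name and the chosen time spans are illustrative.

```python
def growth_factor(years, doubling_period_years=2.0):
    """How many times larger the transistor count is after the given number of years."""
    return 2.0 ** (years / doubling_period_years)


for years in (2, 10, 20):
    print(years, growth_factor(years))
# 2 2.0
# 10 32.0
# 20 1024.0
```

Under this idealised assumption, two decades of doubling gives roughly a thousandfold increase, which is the kind of growth the paragraph above describes as exponential.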

Earlier facial recognition programs compared one 2D image with another 2D image from a database. They were very limited, as the subject needed to be looking almost straight at the camera and the lighting needed to be almost identical in both images. The slightest difference in either could lead to a high rate of failure.

A graph depicting facial recognition errors over time.

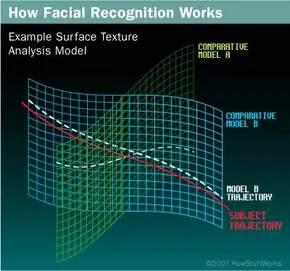

A recently emerging trend uses a 3D model, which is far more accurate. It relies on distinctive features of the face, for example where skin is taut over rigid bone, as around the eye sockets, nose, and chin. Using depth as an extra axis of measurement makes it possible to detect a subject at different viewing angles, up to 90 degrees (a side-on profile). By comparison, the maximum angle of about 35 degrees from the camera with 2D detection was very limiting. Once it detects a face, 3D facial recognition software determines the head's position, size, and angle to the camera. It then measures the curves of the face on a sub-millimetre (or microwave) scale to create a template. The software translates the template into a unique code, using sets of numbers to represent the various features of the person's face. Importantly, it is also backward compatible with 2D photos, using an algorithm to compare 2D and 3D images.

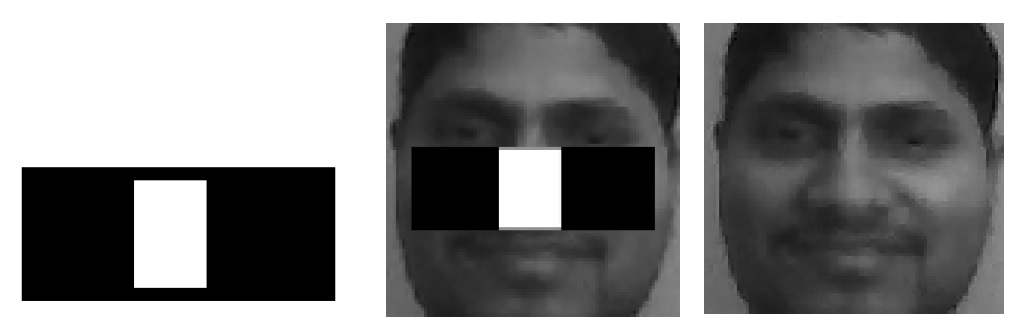

More recent developments use skin biometrics, that is, the uniqueness of skin texture, to give much more accurate results. A process called surface texture analysis takes a picture of a patch of skin and breaks it into small blocks. The software can then distinguish lines, pores, and skin texture with enough accuracy to tell the difference even between identical twins.

Just a few years ago, facial recognition was a boom area of the technology sector. Facebook was using it to pick out faces in crowds and to let users tag faces in posts. Microsoft was building the largest ‘face database’ in the world. Amazon was using it to win contracts from law enforcement agencies across the United States.

This massively accelerated the development of the technology, leading to exponential growth in the accuracy and speed of this form of Artificial Intelligence. It has also raised grave concerns about the privacy implications of the technology, and recently led Facebook to announce that it would be scrapping all facial recognition from its platform and deleting its database of stored images.

In general, Artificial Intelligence, and facial recognition specifically, can present many social and ethical concerns for both developers and consumers. One of the most important and prevalent ethical implications of facial recognition technology concerns consumers’ access to their data and ensuring that this data is kept private and safe. As previously mentioned, deployments of facial recognition process the input data either locally (i.e., on device, with no external contact) or externally (i.e., cloud processing). External processing raises several concerns about privacy, about consumers’ right to access their data, and about maintaining their anonymity.

As also previously mentioned, social media companies such as Facebook utilise facial recognition to automatically ‘tag’ users in photos and posts. Whenever a user uploads content to a social media site such as Facebook or Instagram, the platform typically gains broad rights to use that content under its terms of service. While this may not seem like an issue, there can be several ethical implications as a direct result. For example, when you upload an image to Facebook, its facial recognition technology scans and analyses the people in the image and stores this data on its servers. This can make it extremely hard to remain in control of your online identity, and since you do not fully control your uploaded content on these platforms, they can theoretically control the saved facial recognition data. This raises ethical issues around access to one’s personal data and results in very limited control over one’s online presence.

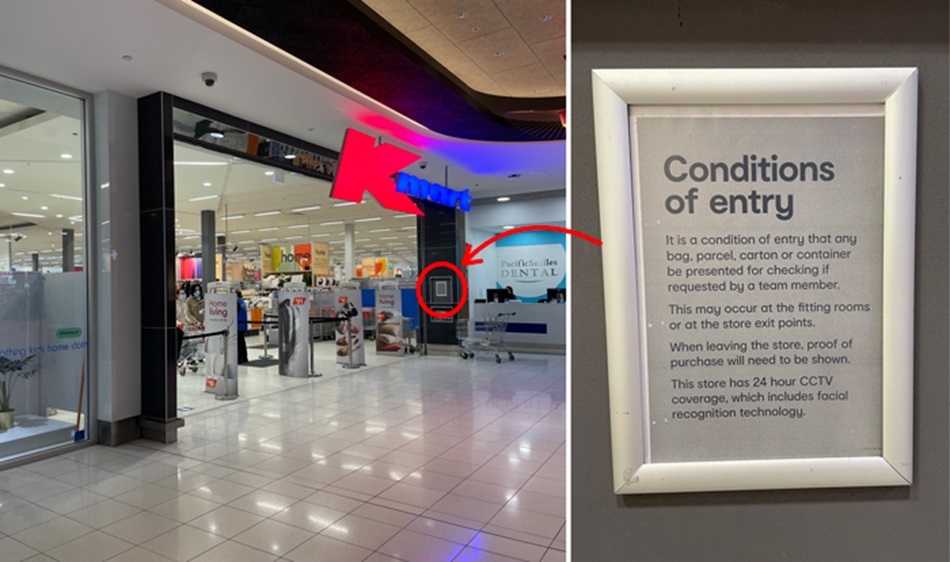

Another extremely important ethical issue is that facial recognition is often deployed with limited or no notification to, or consent from, the people it targets. For example, Bunnings Warehouse and Kmart used facial recognition through the pre-existing CCTV cameras in various stores. A spokesperson from Bunnings claimed that it was only used to “...help identify persons of interest who have previously been involved in incidents of concern in our stores.” While both Bunnings and Kmart did specify that they were using this technology, over 79% of consumers were not aware that this was the case. As shown in the image, signage at a Kmart store in Marrickville disclosed that the store was using this technology. However, this signage is placed out of the consumer's direction of travel, seemingly with the intention that it will not be read in a real-world situation such as a large shopping complex. Data privacy concerns relate to a user’s right to know where, why, and by whom their facial recognition data is being accessed. Following the Bunnings Warehouse example above, consumers are beginning to worry about what was happening to this data.

Was it only being used for ‘identifying theft’, or was it being sold commercially? Some reports claim that Kmart, drawing on its huge database of facial features, had offered data for sale on an online global data bank. These platforms are similar to Acxiom, one of the world’s largest online data retailers, which is targeted at big corporations that want to ‘better understand their customers’.

In terms of social issues, there are not many that can arise from facial recognition technology. However, as people become more aware of and informed about this emerging technology, its potential for loss of privacy, and increased corporate and government involvement, consumers are beginning to lose trust in large companies and sometimes even in governments. In China, with its huge network of facial recognition CCTV cameras, an increasing number of people are becoming sceptical of their government’s decisions. This social issue has the potential to be very significant, as a loss of trust in a country's government can generate disastrous outcomes, such as riots, security breaches, increased public attempts at anonymity, and a lack of trust in government systems.

Overall, these social and ethical concerns can impact the future of facial recognition. They have the potential to affect people’s overall perception of this emerging technology, and potentially their perception of the term ‘Artificial Intelligence’. There need to be adequate measures to ensure that these issues are contained and controlled. If not, they have the potential to severely disrupt the development and even the future of AI. Take a look at this short video below from CHOICE.

In conclusion, facial recognition technology, made possible by Artificial Intelligence, has the potential to be extremely useful in our everyday lives if it is developed, controlled, and maintained correctly. As mentioned previously, the hardware, software, and personnel needed to develop, test, and deploy facial recognition can be extensive, but the task is still achievable if executed correctly. Current limiting factors such as computing power can slow the processing of facial recognition input data in situations such as scanning a crowd of over 30,000 people. However, this is unlikely to remain a relevant issue, since computers become more powerful as time progresses (according to Moore’s Law) and should therefore keep up with increasing volumes of input data. Without past contributions from significant individuals such as Woodrow Bledsoe, the current state of facial recognition technology would simply not have been possible. Without the methods for identifying people by ‘subjective markers’ developed by Goldstein, Harmon, and Lesk, facial recognition would not have developed so quickly. Overall, facial recognition will significantly benefit society, provided that significant issues such as security are contained.